5 September

Programming as a Backstage of VR Development

Introduction

Virtual reality is attracting more and more interest and, as a result, finding more and more applications. It is no longer associated only with entertainment; increasingly we come across phrases such as VR product development, real estate in VR and many more.

Here, however, we will look at the other side of the coin: not the visuals, and not the applications of VR as such, because those topics have been described many times. Instead, we will focus on the essential technical stage, which is the use of programming languages that make immersive experiences possible. You will also find some examples of code snippets used in virtual reality. Let’s get to the gist.

Tools to choose for VR development

As in every field, the right development tools are crucial. The two most common technologies for VR development are Unity and Unreal Engine, both widely known as game engines, and both offer VR libraries.

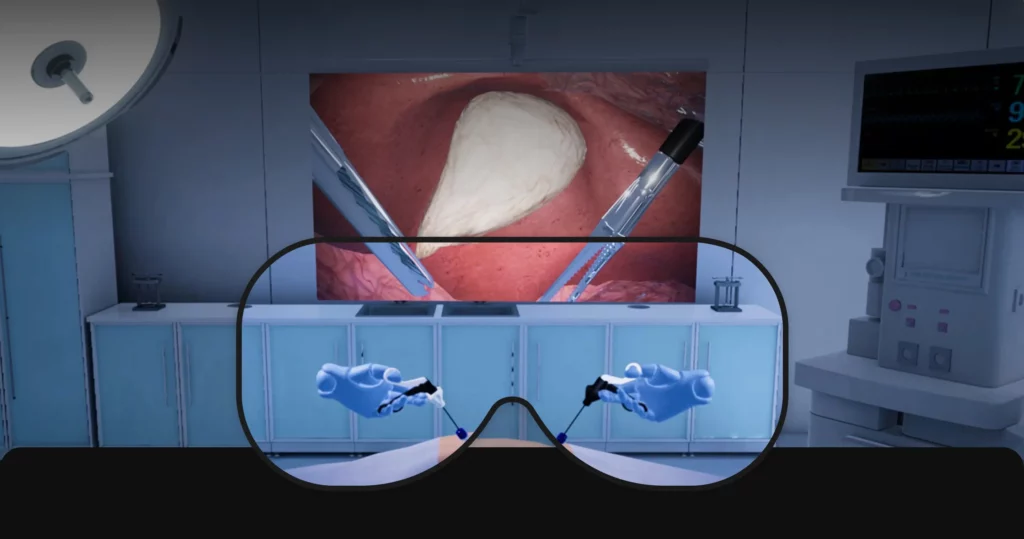

The gaming industry is of course the first field that comes to mind when talking about VR experiences (just like AR, augmented reality). However, both areas have developed so intensively that their applications now also reach real estate (house VR), tourism, manufacturing, marketing (product performance), military and defense, retail, healthcare (medical VR), education and much more. So VR games are no longer the main area.

What about the coding language?

The languages commonly used with these engines are C# for Unity and C++ for Unreal Engine. (Unity also used to support UnityScript, a JavaScript-like language, but it has been deprecated in favor of C#.) Unreal Engine also has its own visual scripting language, Blueprint, which is friendlier for those who are just starting their journey with the engine and don’t have a strong programming background. Blueprint doesn’t require writing a single line of code, because it is a complete system built around a node-based interface for creating elements from within the Unreal Editor. But let’s move on to programming itself.

What is coding in VR for?

We should start with what VR programming means. It can be described as the process of programming software apps or experiences for use in a virtual environment, which can be experienced through VR devices such as headsets or sensors.

Elements that can be programmed in VR are:

User Interface

Preparing interactive menus, buttons, and other interface elements that the user can interact with in the VR environment.

Object interactions

A crucial task is programming the ways users can interact with objects. This includes picking things up, moving them, or using different objects in various ways.

Environment design & effects

This covers coding the physics of the environment, for example gravity and collision detection. It also includes programming changes in lighting, weather conditions (snow, rain, wind) and day/night cycles.
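To make gravity and collision detection a little more concrete, here is a minimal sketch in plain C++ that does not depend on any engine. It assumes a single body moving along the vertical axis and a flat ground plane at height 0; the names `Body`, `Step` and the restitution value are hypothetical and chosen only for illustration. Real engines such as Unity or Unreal Engine handle this for you in their physics systems.

```cpp
#include <cassert>

// Minimal state of a falling body along the vertical axis (metres, m/s).
struct Body {
    double height;    // height above the ground plane
    double velocity;  // vertical velocity, positive is up
};

constexpr double kGravity = -9.81;    // m/s^2, assumed Earth-like gravity
constexpr double kRestitution = 0.5;  // hypothetical: fraction of speed kept after a bounce

// Advance the body by one time step (semi-implicit Euler integration),
// then resolve a collision with the ground plane at height 0.
void Step(Body& body, double dt) {
    assert(dt > 0.0);
    body.velocity += kGravity * dt;     // gravity changes the velocity first
    body.height += body.velocity * dt;  // then the body moves with the new velocity
    if (body.height < 0.0) {            // collision detection: did we pass through the ground?
        body.height = 0.0;              // collision response: put the body back on the surface
        body.velocity = -body.velocity * kRestitution;  // and bounce with some energy loss
    }
}
```

Calling `Step` once per frame with the frame time as `dt` makes the body fall, hit the ground and bounce a little lower each time.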

Character design (especially in game development)

This is programming the movements and interactions of characters in the environment, including AI programming for non-player characters.

Sounds

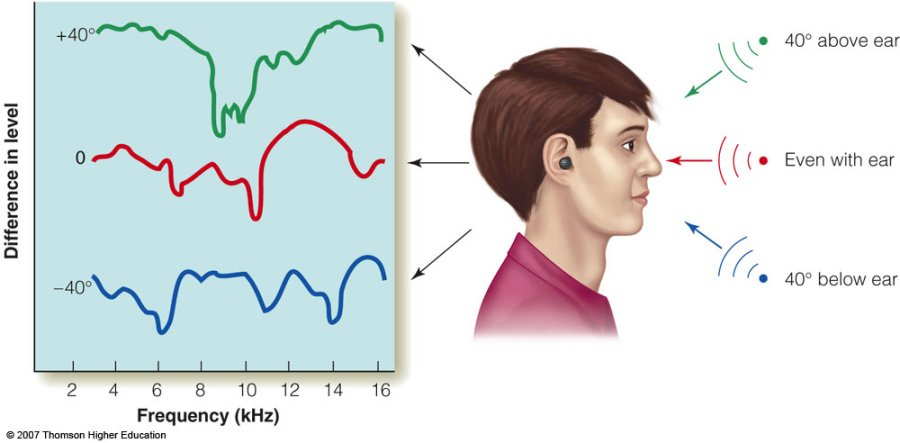

This part includes spatial audio, which in a nutshell is a simulation of how sound behaves in the real world. The concept is very complex, and coding is one of the key factors in building it.

Among the sound-related tasks performed by programmers in VR development are:

- Simulation of real-world sounds

It’s like copying how sound behaves in the real world. Coders can create algorithms that accurately imitate the directionality and distance of sound sources.

- 3D positional audio

Programming is used here to adjust volume and frequency content based on the observer’s location relative to the sound source. The sound shifts as the viewer moves, giving a sense of direction and distance.

- Head tracking

VR systems use head tracking to adapt sound according to the position of the viewer’s head. This part requires coding to combine the audio system with the head tracking system, so that the sound changes in real time as the viewers move their head.

- Binaural audio

Binaural audio creates the impression of 3D sound using only two channels, one for each ear. Coding is used to implement the head-related transfer function (HRTF), a complex mathematical function that describes how a given sound wave (depending on its frequency and direction) differs as it reaches each ear. This lets developers simulate the subtle changes in sound that give the perception that it is coming from a specific point in the virtual 3D space.

- Ambisonics

This is a full-sphere surround sound technique: it represents sound arriving from any direction around the listener, including above and below. Coding is also pivotal here.

- Environmental effects

Environmental effects such as reverberation or occlusion are also created with the help of programmers.

So, developing spatial audio is very complex and involves different programming languages, tools and libraries. C++, JavaScript with the Web Audio API, and specialized audio engines like FMOD or Wwise are at the core.
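As a rough illustration of 3D positional audio combined with head tracking, the sketch below computes left and right channel gains from the listener position, the head yaw and the source position. It is a deliberately simplified model in plain C++: it works only in the horizontal plane, uses inverse-distance attenuation and constant-power panning, and cannot tell front from back. All names (`SpatialGain`, `minDistance`, and so on) are hypothetical; production systems rely on HRTF-based engines such as the ones mentioned above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 { double x, z; };               // horizontal plane only, for brevity
struct StereoGain { double left, right; };  // linear gain per ear, 0..1

constexpr double kPi = 3.14159265358979323846;

// Compute per-ear gains for a sound source, given the listener position and
// head yaw in radians (0 = facing +z, positive = turned toward +x).
StereoGain SpatialGain(Vec2 listener, double yaw, Vec2 source, double minDistance = 1.0) {
    assert(minDistance > 0.0);
    const double dx = source.x - listener.x;
    const double dz = source.z - listener.z;
    const double distance = std::sqrt(dx * dx + dz * dz);

    // Inverse-distance attenuation, clamped so that very close sources stay at full volume.
    const double attenuation = minDistance / std::max(distance, minDistance);

    // Direction of the source relative to where the head is pointing.
    const double azimuth = std::atan2(dx, dz) - yaw;

    // Constant-power panning: pan runs from -1 (fully left) to +1 (fully right).
    const double pan = std::sin(azimuth);
    const double angle = (pan + 1.0) * (kPi / 4.0);  // 0 .. pi/2
    return { attenuation * std::cos(angle), attenuation * std::sin(angle) };
}
```

Re-evaluating the gains every frame with the latest head yaw is what makes the sound appear to stay in place while the viewer turns their head.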

Animation & effects

This covers special effects (for example explosions, fire, water, smoke) and animations (lifelike movements for characters and objects), which can entail using motion capture data or sophisticated animation algorithms.

Navigation & movement

Programming also determines how the user can move in a VR environment: running, jumping, walking, teleportation, etc.
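Teleportation is a good example of how much of movement is plain math. A common approach is to draw an arc from the controller and teleport the user to the point where the arc lands. The sketch below, in engine-independent C++, treats the arc as a projectile and finds where it reaches flat ground at height 0. The flat ground, the `TeleportTarget` name and the default gravity value are assumptions made for illustration; a real project would test the arc against the actual scene geometry.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };  // y is up

// Find where a teleport arc launched from `origin` with initial `velocity`
// lands on flat ground (y = 0). Returns false if the origin is below ground.
bool TeleportTarget(const Vec3& origin, const Vec3& velocity, Vec3& target,
                    double gravity = 9.81) {
    assert(gravity > 0.0);
    if (origin.y < 0.0) {
        return false;
    }
    // Solve origin.y + velocity.y * t - 0.5 * gravity * t^2 = 0 for the
    // non-negative root t (the moment the arc reaches the ground).
    const double discriminant = velocity.y * velocity.y + 2.0 * gravity * origin.y;
    const double t = (velocity.y + std::sqrt(discriminant)) / gravity;

    // Horizontal motion is unaffected by gravity.
    target = { origin.x + velocity.x * t, 0.0, origin.z + velocity.z * t };
    return true;
}
```

Here `origin` would typically be the controller position and `velocity` the controller's forward direction multiplied by a chosen launch speed.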

Multiplayer functionality

It means programming the ability for multiple users to interact in the same VR environment.

Haptic feedback

Coders can program haptic feedback to improve the immersion of an experience. It involves creating sensations of touch and force feedback to match the visual experience.

What should you also understand as a programmer for VR?

Programming itself is not enough to operate in this field. Coding for VR also requires knowledge of 3D modeling, computer graphics, AI and UI design.

Closely related topics that are important to learn in order to code effectively in VR:

- Understanding VR basics

You don’t have to be a VR wizard, but VR programming demands a fundamental understanding of the field. You need to know the difference between 3-DOF (three degrees of freedom, rotation only) and 6-DOF (rotation plus position), the types of VR (mobile, PC/console, standalone) and the basics of VR hardware.

- VR SDKs

A Software Development Kit (SDK) is like an advanced dictionary with ready-made words and sentences that you can use to speed up your work. There are several SDKs dedicated to VR development: Unity’s XR SDK, Google VR SDK, Oculus SDK, etc. Each of them provides APIs and libraries prepared specifically for VR coding.

- 3D modeling and graphics

From this extensive field, programmers should have knowledge about vertices, materials, textures, lighting, shaders and other key elements of 3D development.

- Engines

Unity and Unreal Engine have built-in support for VR and provide a visual editor along with scripting languages.

- Physics

Understanding the principles of physics will absolutely help in building immersive experiences. This includes knowing how objects react to movement. For example, if somebody throws an object, it should move and fall in a certain direction, as in the real world.

- UI & user interaction

A general understanding of interaction design is useful, because the programmer helps develop gaze-based controls, hand-controller input, or even full-body tracking.

- Performance optimization

Optimizing for the best performance demands managing CPU/GPU usage, optimizing rendering and handling memory efficiently.

- Networking & multiplayer

In the game industry in particular, programmers should know concepts such as client-server architecture, handling latency, syncing players’ movements, etc.

- Testing & debugging

Testing VR apps is challenging because of the immersive nature of this technology, so programmers need to develop strategies for effectively testing and debugging within the VR environment.
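To give one of the points above a concrete shape, here is a small sketch of how latency is often hidden when syncing players' movements: the client keeps the two most recent position updates for a remote player and draws the player at an interpolated position between them. This is plain C++ with hypothetical names (`Snapshot`, `InterpolatePosition`); it assumes the updates carry a timestamp and ignores rotation, packet loss and extrapolation.

```cpp
#include <algorithm>
#include <cassert>

struct Vec3 { double x, y, z; };

// A position update received over the network, stamped with the sender's time.
struct Snapshot {
    double time;    // seconds
    Vec3 position;
};

// Linear interpolation between two points; t is expected to be in [0, 1].
Vec3 Lerp(const Vec3& a, const Vec3& b, double t) {
    return { a.x + (b.x - a.x) * t,
             a.y + (b.y - a.y) * t,
             a.z + (b.z - a.z) * t };
}

// Estimate where a remote player should be drawn at `renderTime`. The render
// time is usually kept slightly in the past so that two snapshots surround it.
Vec3 InterpolatePosition(const Snapshot& older, const Snapshot& newer, double renderTime) {
    assert(newer.time > older.time);
    const double t = (renderTime - older.time) / (newer.time - older.time);
    // Clamp so that a late or early render time never overshoots a snapshot.
    return Lerp(older.position, newer.position, std::min(1.0, std::max(0.0, t)));
}
```

The trade-off is a small, constant delay in what the user sees in exchange for smooth movement, which matters a lot in VR where jittery avatars are very noticeable.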

Examples of code snippets

To illustrate what this looks like in practice, it is useful to show code excerpts for specific actions.

In C#, using the Unity game engine, the code might look like this:

- grabbing an object

```csharp
using UnityEngine;
using Valve.VR;

// Attach to a controller object that has a trigger Collider and a Rigidbody.
public class GrabObject : MonoBehaviour
{
    public SteamVR_Action_Boolean grabAction;

    // Assumption: the controller's pose component is assigned in the Inspector.
    // It is used to read the controller velocity when an object is released.
    public SteamVR_Behaviour_Pose controllerPose;

    private GameObject collidingObject; // object the controller is touching
    private GameObject objectInHand;    // object currently being held

    private void SetCollidingObject(Collider col)
    {
        // Ignore the collider if we already track one or it has no Rigidbody.
        if (collidingObject || !col.GetComponent<Rigidbody>())
        {
            return;
        }
        collidingObject = col.gameObject;
    }

    public void OnTriggerEnter(Collider other)
    {
        SetCollidingObject(other);
    }

    public void OnTriggerExit(Collider other)
    {
        // Only forget the object that is actually leaving the trigger.
        if (collidingObject == other.gameObject)
        {
            collidingObject = null;
        }
    }

    private void Grab()
    {
        objectInHand = collidingObject;
        collidingObject = null;

        // Attach the object to the controller with a joint.
        var joint = AddFixedJoint();
        joint.connectedBody = objectInHand.GetComponent<Rigidbody>();
    }

    private FixedJoint AddFixedJoint()
    {
        FixedJoint fx = gameObject.AddComponent<FixedJoint>();
        fx.breakForce = 20000;
        fx.breakTorque = 20000;
        return fx;
    }

    private void Release()
    {
        var joint = GetComponent<FixedJoint>();
        if (joint)
        {
            joint.connectedBody = null;
            Destroy(joint);

            // Pass the controller's motion to the object so it can be thrown.
            var body = objectInHand.GetComponent<Rigidbody>();
            body.velocity = controllerPose.GetVelocity();
            body.angularVelocity = controllerPose.GetAngularVelocity();
        }
        objectInHand = null;
    }

    void Update()
    {
        if (grabAction.GetLastStateDown(SteamVR_Input_Sources.Any) && collidingObject)
        {
            Grab();
        }

        if (grabAction.GetLastStateUp(SteamVR_Input_Sources.Any) && objectInHand)
        {
            Release();
        }
    }
}
```

And in the C++ programming language, using Unreal Engine:

- moving an object

```cpp
#include "MoveObject.h"
#include "GameFramework/Actor.h"

// Sets default values
AMoveObject::AMoveObject()
{
    // Set this actor to call Tick() every frame
    PrimaryActorTick.bCanEverTick = true;
}

// Called when the game starts or when spawned
void AMoveObject::BeginPlay()
{
    Super::BeginPlay();
}

// Called every frame
void AMoveObject::Tick(float DeltaTime)
{
    Super::Tick(DeltaTime);

    // Get the current location
    FVector Location = GetActorLocation();

    // Move the object up and down. Note: this assigns an absolute world Z,
    // so the actor oscillates between -100 and +100 units around Z = 0.
    Location.Z = FMath::Sin(GetGameTimeSinceCreation()) * 100;

    // Set the new location
    SetActorLocation(Location);
}
```

This code defines an Actor (a basic object in Unreal Engine) and moves it up and down in a sine wave pattern. It could be used to create a floating object in VR.
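The Tick() function above assigns an absolute height, so the object always floats around Z = 0. If the object should float around the height where it was placed, the same sine formula can be applied as an offset to a stored base height. The sketch below shows only the math, in plain C++ without any Unreal types; `BobbingHeight`, `amplitude` and `frequencyHz` are hypothetical names, and it assumes the base height is captured once when the object is spawned.

```cpp
#include <cassert>
#include <cmath>

// Height of a floating object at a given time, oscillating around baseHeight.
// amplitude is the maximum offset; frequencyHz is the number of full
// up-and-down cycles per second.
double BobbingHeight(double baseHeight, double timeSeconds,
                     double amplitude = 100.0, double frequencyHz = 0.5) {
    assert(amplitude >= 0.0);
    constexpr double kTwoPi = 6.283185307179586;
    return baseHeight + amplitude * std::sin(kTwoPi * frequencyHz * timeSeconds);
}
```

With the default values, an object placed at height 250 moves between 150 and 350 and completes one cycle every two seconds.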

It’s worth noting that more complex VR applications need a VR SDK, which provides functions and classes for interacting with VR hardware, tracking head and hand movements, rendering stereo images, and so on. The code for such applications is correspondingly more complex.

Conclusion

So, we’ve reached the conclusion. What is certain is that programming for VR is a very versatile profession, requiring knowledge from many areas of design as well as a deep understanding of the user.

We have described this wide field by explaining the basics of VR programming, its tools and the complexity of its components. Code snippets are only a drop of knowledge, but they are useful for getting at least some insight into the nature of this work. VR experiences are definitely a multi-component topic. We will certainly write more on this subject. Stay tuned!
