8 November

In-Camera VFX: Creating Advanced Visuals in Unreal Engine



Introduction

In-Camera VFX (ICVFX) is a cutting-edge, real-time method of capturing visual effects directly in camera. The technique combines LED walls, live camera tracking, real-time rendering, and off-axis projection.

Its primary goal is to remove the need for green screen compositing by achieving final-pixel results in camera. With real-time rendering engines and state-of-the-art LED displays, effects shots can be filmed at the same time as the main shots, whereas conventionally they would take shape over a lengthy post-production process.

In this article, we delve into ICVFX in the context of creating advanced visuals in Unreal Engine, using the automotive industry as an example. We also explain the essential technical parts of the process to help you better understand the technique.

Why is real-time technology so popular?

Real-time technology is now used in many sectors, for many reasons. Fast processing and delivery of information are extremely useful in themselves.

Other significant benefits include:

- Instant feedback

With immediate feedback and updates, you can make quick decisions based on the most up-to-date information.

- Increase of productivity

In manufacturing, real-time tracking and monitoring can help you quickly identify and resolve problems, reducing downtime and improving production efficiency.

- Enhanced user experience

In the context of web apps, real-time technology can significantly improve the user journey by delivering immediate responses and making interactions smooth, seamless, and fast.

- Accuracy and timeliness

In sectors such as finance, healthcare, or emergency services, the accuracy and timeliness of information can be critical. Real-time technology helps ensure that the most current and accurate information is available when it is needed.

LED walls – a revolution in virtual production

In-camera effects have long been achieved with mirrors, forced perspective, painted glass, and similar techniques. LED walls are a newer innovation that lets productions skip much of the traditional post-production stage, in which backgrounds and effects were added after actors and objects had been filmed in front of a green screen.

Instead, a 3D environment is rendered behind the actors, products, or physical set, so you can see the final photorealistic scene in real time rather than waiting for the effect to be achieved in post-production.

Technical parameters of LED panels

The design of the LED stage and its purpose are an integral part of the in-camera VFX setup. The number of LED panels needed and how they are placed affect the rest of the hardware configuration. Panels can be arranged in an arc around an actor or object to provide better ambient and reflective lighting, and an LED ceiling can be added to contribute ambient lighting and reflections across the whole stage.

Turning to the strictly technical aspects, an LED panel is made up of a cluster of cabinets. Each cabinet has a fixed resolution, ranging from very low (such as 92×92 pixels, used for outdoor signs) to high (such as 400×450 pixels, used for very high-resolution indoor signs). The physical size varies by manufacturer.
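Because every cabinet has a fixed resolution, the pixel canvas of a flat wall built from identical cabinets is simple multiplication. The sketch below assumes a rectangular grid of one cabinet type; the 20 × 8 layout is a hypothetical example, and `CabinetSpec` and `wall_resolution` are illustrative names, not part of any vendor tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CabinetSpec:
    # Pixel resolution of a single cabinet (fixed by the manufacturer).
    width_px: int
    height_px: int


def wall_resolution(cabinet: CabinetSpec, columns: int, rows: int) -> tuple[int, int]:
    """Total pixel canvas of a flat, rectangular grid of identical cabinets."""
    if columns <= 0 or rows <= 0:
        raise ValueError("grid must have at least one column and one row")
    return cabinet.width_px * columns, cabinet.height_px * rows


# Hypothetical wall: 20 x 8 cabinets of the high-resolution indoor type.
indoor = CabinetSpec(width_px=400, height_px=450)
width, height = wall_resolution(indoor, columns=20, rows=8)
print(f"{width} x {height} px, {width * height:,} pixels")  # 8000 x 3600 px, 28,800,000 pixels
```

Curved walls and mixed cabinet types break the simple product, but the per-cabinet resolution remains the building block.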

Next comes the LED processor: the hardware and software that combines many cabinets into an array that displays a single image. Cabinets can be arranged in any configuration within the canvas driven by the LED processor, and a large LED stage can have 10 or more LED processors controlling a single LED wall.
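To see why a large wall needs several processors, here is a minimal sketch that assigns cabinets to processors row by row. It assumes each processor has a fixed pixel budget and never drives a partial cabinet; the 2,000,000-pixel budget is hypothetical, and real processors add further limits (output ports, refresh rate, mapping rules) that this ignores.

```python
def assign_cabinets(columns: int, rows: int, cabinet_px: int,
                    processor_capacity_px: int) -> dict[tuple[int, int], int]:
    """Map each (column, row) cabinet to an LED processor index.

    Cabinets are filled row by row, and each processor takes as many whole
    cabinets as fit in its (hypothetical) pixel budget.
    """
    per_processor = processor_capacity_px // cabinet_px  # whole cabinets only
    if per_processor == 0:
        raise ValueError("a single cabinet exceeds the processor's pixel budget")
    mapping: dict[tuple[int, int], int] = {}
    for index in range(columns * rows):
        row, col = divmod(index, columns)
        mapping[(col, row)] = index // per_processor
    return mapping


# Hypothetical wall: 20 x 8 cabinets of 400 x 450 px, 2,000,000-pixel budget per processor.
layout = assign_cabinets(20, 8, 400 * 450, 2_000_000)
print(len(set(layout.values())))  # 15
```

Each processor here fits 11 cabinets, so 160 cabinets need 15 processors, which is consistent with large stages running 10 or more.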

Camera tracking methods with in-camera VFX

Camera tracking relays the position and movement of the physical camera to the virtual world. This is what allows the engine to render the correct perspective of the production camera relative to the virtual environment.
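The "correct perspective" is where the off-axis projection mentioned in the introduction comes in: the tracked camera is rarely centered on the wall, so the view frustum through the LED surface is asymmetric. The sketch below follows Robert Kooima's generalized perspective projection for a single flat screen. It illustrates the geometry only; it is not a description of how nDisplay implements projection internally, and the corner and camera coordinates are hypothetical.

```python
Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    length = _dot(a, a) ** 0.5
    return (a[0] / length, a[1] / length, a[2] / length)


def off_axis_frustum(pa: Vec3, pb: Vec3, pc: Vec3, eye: Vec3, near: float):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    pa, pb, pc: lower-left, lower-right and upper-left screen corners.
    eye: tracked camera position, in the same world units.
    """
    vr = _normalize(_sub(pb, pa))    # screen "right" axis
    vu = _normalize(_sub(pc, pa))    # screen "up" axis
    vn = _normalize(_cross(vr, vu))  # screen normal, pointing toward the camera
    va, vb, vc = _sub(pa, eye), _sub(pb, eye), _sub(pc, eye)
    d = -_dot(va, vn)                # distance from camera to the screen plane
    if d <= 0:
        raise ValueError("camera must be in front of the screen")
    scale = near / d                 # project screen extents onto the near plane
    return (_dot(vr, va) * scale, _dot(vr, vb) * scale,
            _dot(vu, va) * scale, _dot(vu, vc) * scale)


# A 2 x 2 screen at z = 0, camera 1 unit in front and 0.5 units right of center.
print(off_axis_frustum((-1, -1, 0), (1, -1, 0), (-1, 1, 0), (0.5, 0, 1), near=1.0))
# (-1.5, 0.5, -1.0, 1.0)
```

The asymmetric left/right extents are the point: as the tracked camera moves, these values change every frame, and a standard off-center perspective matrix built from them (plus a rotation into the screen's axes) gives the perspective-correct backdrop.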

Several camera tracking methods are used in ICVFX:

- Optical tracking – specialized IR-sensitive cameras track reflective or active IR markers to locate the production camera.

- Feature tracking – this method uses recognizable image patterns on real objects as its tracking source, which can replace the custom markers that optical tracking systems rely on.

- Inertial tracking – IMUs (Inertial Measurement Units) contain a gyroscope and an accelerometer used to estimate camera position and orientation. IMUs are often combined with both feature and optical tracking systems.
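One reason IMUs are paired with optical or feature tracking is that integrating a gyroscope is fast but drifts, while the other systems give an absolute reference. A complementary filter is one simple, well-known way to blend the two; the single-axis sketch below is an illustration under assumed values (1 deg/s gyro bias, 100 Hz samples, `alpha = 0.98`), not the fusion method of any particular tracking vendor.

```python
from typing import Optional, Sequence


def complementary_filter(gyro_rates: Sequence[float],
                         optical_angles: Sequence[Optional[float]],
                         dt: float, alpha: float = 0.98) -> list[float]:
    """Fuse a gyro rate (deg/s) with absolute optical angles (deg) on one axis.

    alpha close to 1 trusts the gyro for short-term motion; the (1 - alpha)
    share of each optical reading slowly pulls accumulated drift back.
    A None optical sample (e.g. markers occluded) falls back to the gyro alone.
    """
    # Start from the first available absolute reading, or 0 if there is none.
    angle = next((a for a in optical_angles if a is not None), 0.0)
    estimates = []
    for rate, optical in zip(gyro_rates, optical_angles):
        predicted = angle + rate * dt  # integrate the IMU: fast, but drifts
        if optical is None:
            angle = predicted
        else:
            angle = alpha * predicted + (1 - alpha) * optical
        estimates.append(angle)
    return estimates


# Hypothetical case: camera held still at 0 deg, gyro has a 1 deg/s bias, 100 Hz for 10 s.
n, dt = 1000, 0.01
gyro = [1.0] * n
optical = [0.0] * n
fused = complementary_filter(gyro, optical, dt)
gyro_only = sum(r * dt for r in gyro)
print(f"gyro only: {gyro_only:.2f} deg, fused: {fused[-1]:.2f} deg")
# gyro only: 10.00 deg, fused: 0.49 deg
```

Production tracking systems typically use more elaborate estimators (such as Kalman filters) and track all six degrees of freedom, but the trade-off is the same.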

How does the Unreal Engine support ICVFX?

A game engine renders the virtual backdrop onto the LED panels, and real-time rendering is key: as the tracked camera moves, the background updates smoothly with it.

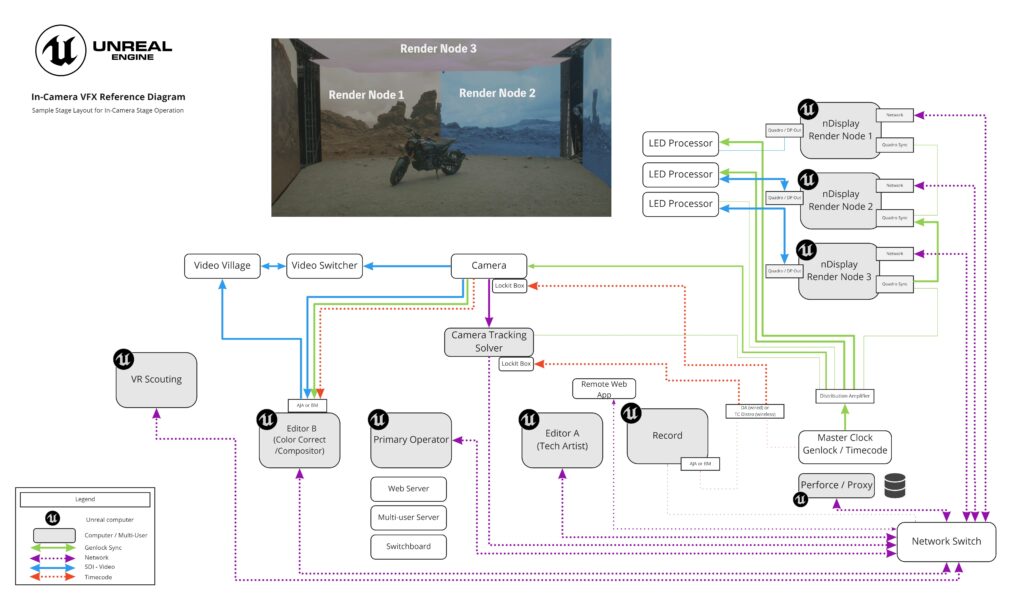

Unreal Engine supports ICVFX through several systems, including nDisplay, Multi-User Editing, Live Link, and Web Remote Control.

- nDisplay/render nodes

Each render node handles a piece of the LED volume and requires an NVIDIA GPU together with an NVIDIA Quadro Sync II card. nDisplay, combined with genlock, also keeps all devices synchronized. Without genlock, the camera, computers, and tracking system would each run on their own internal clocks and fall out of step with one another. Note that even two identical devices do not keep identically synced internal clocks.
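A quick calculation shows why free-running clocks are a problem. The sketch assumes two devices whose clocks differ by a hypothetical 50 parts per million (ppm) at 24 fps; the figures are illustrative, not measured specifications of any device.

```python
def drift_frames(fps: float, clock_error_ppm: float, seconds: float) -> float:
    """Frames by which a free-running device drifts from a reference clock."""
    return fps * seconds * clock_error_ppm * 1e-6


def seconds_until_one_frame_drift(fps: float, clock_error_ppm: float) -> float:
    """Time until the accumulated drift reaches a whole frame."""
    return 1.0 / (fps * clock_error_ppm * 1e-6)


# Hypothetical: 24 fps, clocks differing by 50 ppm.
print(round(drift_frames(24, 50, 3600), 2))           # 4.32  (frames after one hour)
print(round(seconds_until_one_frame_drift(24, 50)))   # 833   (about 14 minutes)
```

Even a tiny mismatch accumulates into whole frames within a single shooting session, which is why every device is locked to one shared reference signal instead of its own clock.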

- Multi-user editing

Multi-User Editing enables robust collaboration on any type of change. The main operator machine is responsible for scene modifications and for updating the live nDisplay render machines. A single multi-user session can include multiple operator machines that carry out different tasks and modify the scene in real time. To connect computers to the same session, every machine must have the same version of Unreal Engine installed and a copy of the project with exactly the same content.
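Because every machine needs an identical copy of the project, a simple pre-flight check is to compare a content fingerprint computed on each machine. The helper below is hypothetical and not part of Unreal Engine's tooling; it assumes that locally generated folders (here `Saved`, `Intermediate`, and `DerivedDataCache`) should be excluded, which you may need to adjust for your project.

```python
import hashlib
import os

# Assumption: folders Unreal generates per machine, excluded from the comparison.
DEFAULT_SKIP = frozenset({"Saved", "Intermediate", "DerivedDataCache"})


def project_fingerprint(project_root: str, skip_dirs=DEFAULT_SKIP) -> str:
    """SHA-256 over every file's relative path and content hash, in a stable order."""
    digest = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(project_root):
        # Prune skipped folders in place and sort for a deterministic walk.
        dirnames[:] = sorted(d for d in dirnames if d not in skip_dirs)
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, project_root).replace(os.sep, "/")
            file_hash = hashlib.sha256()
            with open(path, "rb") as fh:
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    file_hash.update(chunk)
            # One line per file: "relative/path\0<file sha256>\n".
            digest.update(rel.encode("utf-8") + b"\0" + file_hash.hexdigest().encode() + b"\n")
    return digest.hexdigest()
```

Run it on each operator and render machine and compare the resulting strings; any mismatch points to a project copy that needs syncing before joining the session.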

- Live Link

Live Link is an Unreal Engine framework for ingesting live data. In ICVFX it plays a major role by distributing the tracked camera information, and it can work with nDisplay to deliver that tracking information to the cluster nodes.

- Remote Control for ICVFX

With so many machines involved in an ICVFX shoot, the scene needs to be controllable in real time. Remote Control provides a web app, built with HTML, CSS, and JavaScript, that can control the scene from any device with a web browser, for example to change colors, lighting, or the positions of virtual objects and actors.
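Under the hood, the web app talks to Unreal's Remote Control API over HTTP. The sketch below builds (but does not send) a property-write request; the port 30010, the `/remote/object/property` route, and the body fields reflect our understanding of that API and should be checked against Epic's documentation for your engine version. The object path and the `Intensity` value are hypothetical examples.

```python
import json
import urllib.request


def build_property_request(host: str, object_path: str, property_name: str,
                           value, port: int = 30010) -> urllib.request.Request:
    """Build a PUT request that writes one property through Remote Control."""
    body = {
        "objectPath": object_path,        # full path of the object in the level
        "access": "WRITE_ACCESS",         # request a write rather than a read
        "propertyName": property_name,
        "propertyValue": {property_name: value},
    }
    return urllib.request.Request(
        f"http://{host}:{port}/remote/object/property",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical object path for a key light's component in the stage level.
req = build_property_request(
    "127.0.0.1",
    "/Game/Maps/Stage.Stage:PersistentLevel.KeyLight.LightComponent0",
    "Intensity",
    5.0,
)
# urllib.request.urlopen(req)  # send only when an editor with Remote Control is running
```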

ICVFX hardware recommendations for setup with Unreal Engine

ICVFX may require a complex network of interconnected devices with different functions on set.

WORKSTATION

Plan for enough space and power for data storage. If you want to use multiple GPUs, the workstation should support NVLink.

CPU

CPUs commonly used for ICVFX include the AMD Ryzen 9 3950X, Intel Xeon and Intel Core i9 processors, and AMD Ryzen Threadripper processors. When choosing a processor, favor faster clock speeds over a higher number of cores. A clock speed of at least 3 GHz is the recommended starting point.

GPU

For the GPU, consider NVIDIA RTX professional graphics cards, especially if you use ray tracing and other advanced rendering techniques in Unreal Engine. Synchronizing displays across the entire LED volume requires NVIDIA Quadro Sync, which works with NVIDIA's professional GPUs rather than GeForce cards.

RAM

For most ICVFX scenarios, 64 GB of DDR4 memory is recommended. Productions that expect to work with very large files may need more.
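On a stage with many render nodes, it can help to check each machine against these recommendations before a shoot. The sketch below encodes only the figures stated above (a 3 GHz minimum clock, 64 GB of RAM, a sync card on render nodes); `MachineSpec`, `check_machine`, and the host names are hypothetical.

```python
from dataclasses import dataclass

# Thresholds taken from the recommendations above.
MIN_CPU_CLOCK_GHZ = 3.0
RECOMMENDED_RAM_GB = 64


@dataclass(frozen=True)
class MachineSpec:
    name: str             # hypothetical host name
    cpu_clock_ghz: float
    ram_gb: int
    has_sync_card: bool   # NVIDIA Quadro Sync card installed


def check_machine(spec: MachineSpec, render_node: bool = True) -> list[str]:
    """Return human-readable warnings; an empty list means no issues found."""
    warnings = []
    if spec.cpu_clock_ghz < MIN_CPU_CLOCK_GHZ:
        warnings.append(f"{spec.name}: CPU clock {spec.cpu_clock_ghz} GHz is below {MIN_CPU_CLOCK_GHZ} GHz")
    if spec.ram_gb < RECOMMENDED_RAM_GB:
        warnings.append(f"{spec.name}: {spec.ram_gb} GB RAM is below the recommended {RECOMMENDED_RAM_GB} GB")
    if render_node and not spec.has_sync_card:
        # Only render nodes drive the LED volume, so only they need the sync card.
        warnings.append(f"{spec.name}: render node has no sync card for the LED volume")
    return warnings


for machine in (MachineSpec("render-01", 3.5, 64, True),
                MachineSpec("render-02", 2.8, 32, False)):
    print(machine.name, check_machine(machine))
```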

STORAGE

Every computer holds its own copy of the project data, so fast local storage is essential for the best performance. M.2 solid-state drives (SSDs) are recommended as data drives separate from the computer's boot drive.

VIDEO CARD

If your project uses live green screen compositing, you will need an SDI video card to support camera input, composite output, and timecode synchronization.

STORAGE NETWORK CARD

To maintain fast data transfer between operator systems and render machines, a 10-Gigabit Ethernet (10 GbE) Network Interface Controller (NIC) is recommended.
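The difference between 1 GbE and 10 GbE is easy to quantify. The sketch below estimates how long it takes to move a project across the network; the 100 GB project size and the 70% effective-throughput factor are hypothetical, since real throughput depends on protocol overhead, disks, and switches.

```python
def transfer_seconds(size_gb: float, link_gbit_s: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes (10**9 bytes) over a link_gbit_s link."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_gb * 8 / (link_gbit_s * efficiency)  # 8 bits per byte


# Hypothetical 100 GB project at 70% effective throughput.
for link in (1, 10):
    print(f"{link} GbE: {transfer_seconds(100, link, 0.7) / 60:.1f} min")
# 1 GbE: 19.0 min
# 10 GbE: 1.9 min
```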

Automotive usage

The automotive sector is no exception among the industries benefiting from real-time technology. It makes it possible to process data and improve not only vehicle simulations but also in-car AR features.

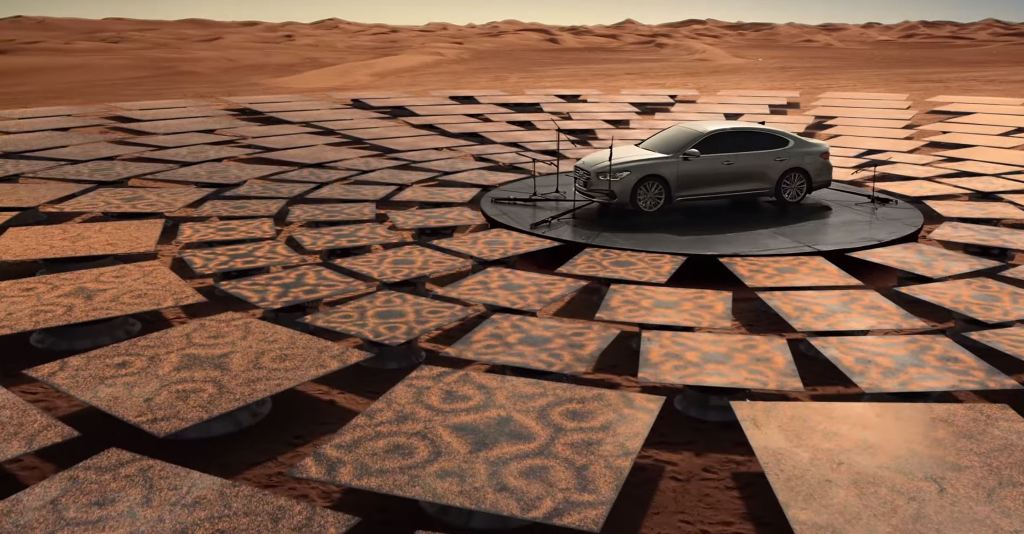

The ability to visualize in real time and create environmental simulations is also changing how the automotive industry approaches design, testing, and promotion. This holistic approach draws on assets from the traditional design and testing phases and combines them with virtual production. The gains in both quality and efficiency show how big a step forward this is. Also worth mentioning is the importance of vertically integrated pipelines that respond to the automotive industry's changing requirements.

What does this make possible for the automotive sector? Many car manufacturers want to show their models in settings such as beaches, deserts, and mountain roads, all of which make highly effective commercial backdrops, so building a library of assets for such locations is very efficient. ICVFX also makes it possible to film cars in locations that are hard to reach, such as the Arctic Circle. Instead of shooting on a green screen, specialists can recreate the environment digitally: any place where organizing a full production (crew, equipment, cars, etc.) isn't feasible can be recreated in Unreal Engine.

Security & control of vehicles

With the traditional approach, filming cars in public, especially unannounced models or those under a "code red", was often difficult and meant waiting for legal permits. It was also hard to keep full control over security, and there was always a risk of unauthorized people appearing on set. Because ICVFX moves the shoot onto a controlled stage, it largely removes these issues.

It is also worth mentioning the dynamic nature of shooting automotive commercials. Scene verification for driving content has subtleties that differ from other types of volume content. However, that topic, including how vehicles are captured and path traced, deserves a separate, detailed article.

Conclusions

We discussed the technical aspects of working with ICVFX, which provide a concrete introduction to the subject. Using the automotive industry as an example, we showed which results that standard green screen shooting cannot achieve become possible with ICVFX. The technology has many more nuances, which we will describe in future articles.

Real-time technology has arguably revolutionized the market, and its seamless integration with Unreal Engine is a major opportunity to achieve the best possible results. Stay tuned for more content!
