18 January

Deploying Nginx as an API Gateway – Tips & Functionalities


Introduction

Companies are increasingly leveraging Application Programming Interfaces (APIs) to connect systems, streamline operations, and offer innovative services. But as the complexity and number of APIs grow, managing them becomes a daunting task.

This is where an API Gateway comes into play. However, with so many API Gateways available, it is hard to choose the one that best fits your requirements, and finding the right option often requires a deeper look at the topic.

In this article, we discuss Nginx, one of the best choices across the range from simple to complex feature sets. We give tips on configuring Nginx PLUS as an API Gateway as a basis for production deployments. We also go through the API Gateway features that the free version of Nginx does not support natively, which keep it from being fully functional as an API Gateway on its own. Finally, we describe the available functionalities and the benefits of deploying them to help you decide whether they fit your needs.

Understanding the concept of an API Gateway

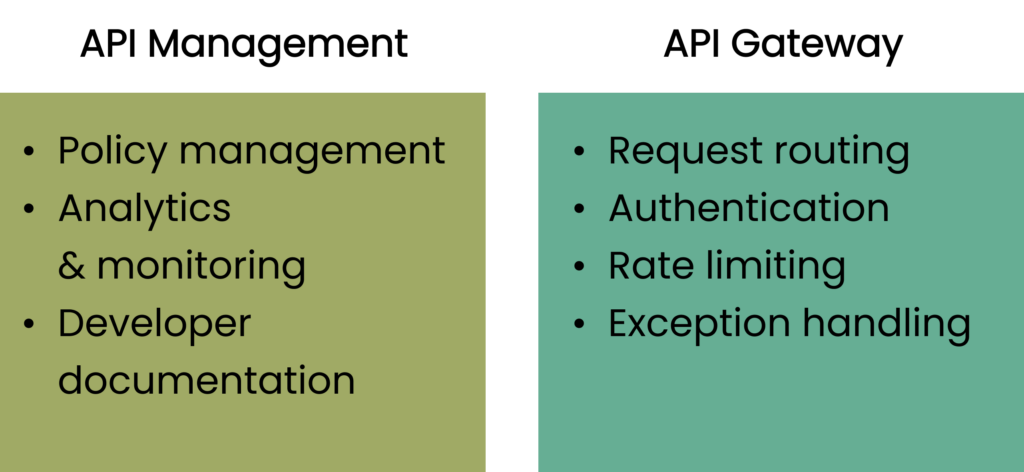

An API Gateway is a server that acts as an intermediary, processing API requests between clients and services. Essentially, all API traffic passes through it: it handles requests and responses on behalf of the client and the microservice. It works as a reverse proxy that accepts all application service calls, aggregates the various services required to fulfill them, and returns the appropriate result.

What is Nginx and what does it offer?

Nginx is software that works as a reverse proxy and can, at the same time, act as a load balancer (replacing hardware load balancers and helping to reduce costs) and as a single entry point for backend applications. Nginx is popular in many areas; as an API Gateway it is lightweight, performs well, and can be deployed in the cloud, in edge environments, and on premises. It can also handle web traffic by translating across protocols: HTTP/2, HTTP, FastCGI, and uwsgi.
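To make the reverse proxy, load balancer, and single-entry-point roles concrete, here is a minimal sketch of an nginx.conf that exposes one public listener in front of two backend services. The addresses and ports are illustrative assumptions; f1-api reuses the upstream name from the examples later in this article, and users-api is a hypothetical second service.

```nginx
# Minimal nginx.conf: a single public entry point for two backend services.
# All addresses and the users-api service are illustrative assumptions.
events {}

http {
    # Two instances of the same service: Nginx load balances between them
    # (round robin by default).
    upstream f1-api {
        server 10.1.1.4:8001;
        server 10.1.1.4:8002;
    }

    # A second, hypothetical backend service
    upstream users-api {
        server 10.1.1.5:9000;
    }

    server {
        listen 80;

        # Route by URI prefix to the matching service
        location /api/f1/ {
            proxy_pass http://f1-api;
        }

        location /api/users/ {
            proxy_pass http://users-api;
        }
    }
}
```

After editing the file, `nginx -t` checks the syntax and `nginx -s reload` applies the change without dropping active connections.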

These features make Nginx a flexible platform on which to build your API Gateway. Many CDNs also use it to cache data at edge locations. Importantly, you can start with only the features you need and extend the setup as your product evolves. If, however, you need a complete package and don’t want to rely on additional plugins, you can choose Nginx PLUS. The open-source version of Nginx will also work if you integrate the appropriate modules or plugins.

For the configuration steps below, however, we use Nginx PLUS as the example.

Configuration of Nginx PLUS as an API Gateway

Below we describe the general process of deploying Nginx PLUS as an API Gateway (for a RESTful API that exchanges JSON).

- Installation of Nginx PLUS

Detailed installation instructions are available on the Nginx website.

- Define the API endpoints

API endpoints are the points at which clients interact with an API. Defining them in Nginx PLUS means specifying the URIs (paths) that clients can use to access and manipulate resources.

It can also mean specifying which HTTP methods (GET, POST, PUT, DELETE, etc.) each endpoint accepts and which actions they should trigger on the server side.
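In Nginx, an endpoint is typically expressed as a location block, and the limit_except directive can restrict which HTTP methods it accepts. The sketch below reuses the f1-api upstream name from the later examples; the backend address and the method lists are assumptions chosen for illustration.

```nginx
upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    # Read-only endpoint: only GET (and, implicitly, HEAD) is allowed
    location = /api/f1/seasons {
        limit_except GET {
            deny all;
        }
        proxy_pass http://f1-api;
    }

    # Endpoint that also accepts writes
    location /api/f1/drivers {
        limit_except GET POST PUT DELETE {
            deny all;
        }
        proxy_pass http://f1-api;
    }
}
```

Requests using a method outside the list receive 403 Forbidden from the gateway and never reach the backend.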

- Configure the API Gateway

In this step, you set up Nginx PLUS to act as an API Gateway: managing API requests and responses, routing requests to the appropriate services, and handling errors and exceptions. It can also include security settings such as API keys, OAuth tokens, and rate limiting.
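One part of this step that is easy to overlook is returning errors in the same JSON format as the API itself, instead of Nginx's default HTML error pages. A minimal sketch, assuming the f1-api upstream from the later examples and illustrative error messages:

```nginx
upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    location /api/f1/ {
        proxy_pass http://f1-api;

        # Also pass backend 502/503/504 responses to error_page
        # (502 and 504 generated by Nginx itself are handled either way)
        proxy_intercept_errors on;
        error_page 502 503 504 = @api_unavailable;
    }

    # Any URI that does not match a defined endpoint
    location / {
        default_type application/json;
        return 404 '{"status":404,"message":"Resource not found"}\n';
    }

    # Named location used only for error handling
    location @api_unavailable {
        default_type application/json;
        return 503 '{"status":503,"message":"Service temporarily unavailable"}\n';
    }
}
```

Because error_page is defined only for 502, 503, and 504, other backend responses (including the backend's own 404s) are passed to the client unchanged.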

- Test the API Gateway

This step verifies that the API Gateway works as expected. It can include sending test requests to the defined endpoints and checking that the responses are as anticipated. It can also involve performance testing to ensure that the gateway can handle the expected load, as well as security testing to ensure that the gateway properly protects the underlying services.

- Deploy

This step covers launching the API gateway into a live production environment. It involves making sure that the gateway is properly integrated with the other components of the system, that it is correctly configured for production, and that all necessary monitoring and logging tools are in place. This step is crucial to ensuring that the API gateway is ready to handle real traffic and provide a robust and reliable service.
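For the logging and monitoring part of this step, a structured access log is easier to feed into monitoring tools than the default text format. The sketch below assumes a hypothetical log path, field selection, and backend address; escape=json requires Nginx 1.11.8 or later, and stub_status requires a build that includes ngx_http_stub_status_module (many packaged builds do). Running `nginx -t` before reloading validates the configuration.

```nginx
# http context: write one JSON object per request
log_format api_json escape=json
    '{"time":"$time_iso8601","client":"$remote_addr",'
    '"method":"$request_method","uri":"$request_uri",'
    '"status":$status,"request_time":$request_time,'
    '"upstream":"$upstream_addr","upstream_time":"$upstream_response_time"}';

server {
    listen 80;

    access_log /var/log/nginx/api_access.log api_json;

    location /api/f1/ {
        proxy_pass http://10.1.1.4:8001;   # illustrative backend address
    }

    # Basic connection counters for monitoring, reachable only from localhost
    location = /nginx_status {
        stub_status;
        allow 127.0.0.1;
        deny all;
    }
}
```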

API Gateway features not supported by the free version of Nginx

As mentioned before, if you want to stick with the free version of Nginx, using it as an API Gateway will require additional modules and plugins. The basic open-source version can still be used as a reverse proxy and load balancer and can serve static content, but it has limitations as an API Gateway. Some functions typically associated with an API Gateway, such as JWT validation and OAuth integration, are not natively supported by the open-source version, while others, such as API versioning and API key authentication, have to be assembled by hand from generic directives. Basic rate limiting (limit_req) is available in both versions.

However, some of these functionalities can be obtained by integrating additional open-source modules or plugins. For example:

- Auth Request Module

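This module (ngx_http_auth_request_module) implements client authorization based on the result of a subrequest: access is allowed if the subrequest to an authentication service returns a 2xx response and denied if it returns 401 or 403.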

- Lua Module (OpenResty)

This module allows embedding of Lua scripts into Nginx, which can be used to handle more advanced use cases, including custom authentication or rate limiting.
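As an illustration of the Auth Request approach, the sketch below sends a subrequest to an external authentication service before proxying the API call. The auth service URL, the /_validate path, and the X-Original-URI header are hypothetical. The module ships with open-source Nginx but is only compiled in when the --with-http_auth_request_module configure option is used; many packaged builds include it.

```nginx
upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    location /api/f1/ {
        # Proxy the request only if the subrequest returns 2xx;
        # a 401 or 403 from the subrequest is returned to the client.
        auth_request /_validate;
        proxy_pass http://f1-api;
    }

    # Internal-only location that forwards the client's headers to the auth service
    location = /_validate {
        internal;
        proxy_pass http://auth.example.internal/validate;   # hypothetical auth service
        proxy_pass_request_body off;                          # only headers are needed
        proxy_set_header Content-Length "";
        proxy_set_header X-Original-URI $request_uri;
    }
}
```

Headers such as Authorization are forwarded to the subrequest by default, so the auth service can validate whatever credential scheme the API uses.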

Keep in mind that each module adds configuration and maintenance effort. Depending on your needs and the complexity of your APIs, choose the option that does not add unnecessary work.

Functionalities of an Nginx API Gateway

TLS Termination

- listen directive with the ssl parameter

- location of the certificate and key (ssl_certificate, ssl_certificate_key)

- TLS protocols to support (ssl_protocols)

- ciphers to enable (ssl_ciphers)

Nginx enables TLS termination at the gateway. When an HTTPS request comes in, TLS is terminated at the gateway, and from that point on the request forwarded to the backend can be plain HTTP, or the gateway can open a new HTTPS connection. The SSL certificate and key can be stored on disk.
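The directives listed above fit together roughly as follows. This is a sketch, not a hardened configuration: the host name, certificate paths, and backend address are assumptions, and HIGH:!aNULL:!MD5 is simply Nginx's default cipher string.

```nginx
upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    # TLS is terminated here
    listen 443 ssl;
    server_name api.example.com;   # hypothetical host name

    # Certificate and private key stored on disk (paths are assumptions)
    ssl_certificate     /etc/nginx/ssl/api.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/api.example.com.key;

    # Protocols and ciphers to enable
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers   HIGH:!aNULL:!MD5;

    location /api/f1/ {
        # Plain HTTP from the gateway to the backend; use https:// here
        # to open a new TLS connection to the backend instead
        proxy_pass http://f1-api;
    }
}
```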

Request Routing

- nested locations

- location (URI) matching:

Exact (=)

location = /api/f1/seasons {
    proxy_pass http://f1-api;
}

Regex (~)

location ~ /api/f1/[12][0-9]+ {
    proxy_pass http://f1-api;
}

Prefix (no modifier)

location /api/f1/drivers {
    proxy_pass http://f1-api;
}

- policies apply at any level

The gateway routes each client request to the appropriate service based on the request path, method, and other parameters.
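Nested locations and policies that apply at any level can be combined as in the sketch below. Nginx picks an exact (=) match immediately; otherwise it remembers the longest matching prefix, then tries regular expressions in the order they appear and uses the first one that matches, falling back to the remembered prefix. The allowed network range and the backend address are assumptions for illustration.

```nginx
upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    # Outer prefix location: access rules set here are inherited by the nested locations
    location /api/f1/ {
        # Example policy (network range is an assumption): internal clients only
        allow 10.0.0.0/8;
        deny  all;

        # Exact match nested inside the prefix
        location = /api/f1/seasons {
            proxy_pass http://f1-api;
        }

        # Regex match for year-like paths such as /api/f1/2023
        location ~ ^/api/f1/[12][0-9][0-9][0-9]$ {
            proxy_pass http://f1-api;
        }

        # Everything else under /api/f1/
        proxy_pass http://f1-api;
    }
}
```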

Authentication and Authorization

JSON Web Tokens (JWTs) are increasingly used for API authentication. However, native JWT validation is available only in Nginx PLUS.

- auth_jwt directive (each request routed to the endpoint is verified against the local JWK file)

location = /api/f1/circuits {
    # The argument is the realm name (illustrative); "off" disables validation
    auth_jwt "F1 API";
    auth_jwt_key_file /etc/nginx/api_secret.jwk;
    proxy_pass http://f1-api;
}

Typically, a shared secret, commonly known as an API key, is used to verify the identity of an API client (the remote client software that requests API resources). A conventional API key acts like a long, complex password that the client sends as an extra HTTP header with every request. If the provided API key matches one in the list of valid keys, access to the requested resource is granted. Note that the API endpoint itself usually doesn’t validate API keys.

Instead, this task is typically handled by an API gateway, which also routes each request to the appropriate endpoint. This not only reduces the load on the backend services but also brings the advantages of a reverse proxy, including high availability and load balancing across multiple API endpoints.
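A minimal sketch of gateway-side API key validation, which also works in open-source Nginx, uses a map lookup. The apikey header name, the key values, and the client names are placeholders; in practice, the keys would usually live in a separate included file.

```nginx
# http context: map each valid key to a client name; unknown keys map to ""
map $http_apikey $api_client_name {
    default                "";
    "replace-with-key-1"   "client_one";   # placeholder key
    "replace-with-key-2"   "client_two";   # placeholder key
}

upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    location /api/f1/ {
        # No key supplied: 401 Unauthorized
        if ($http_apikey = "") {
            return 401;
        }
        # Key supplied but not in the map: 403 Forbidden
        if ($api_client_name = "") {
            return 403;
        }
        # Tell the backend which client is calling (optional)
        proxy_set_header X-Api-Client $api_client_name;
        proxy_pass http://f1-api;
    }
}
```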

Load Balancing

- sticky session as a method

Subsequent requests from a client are routed to the server that handled its first request

- all Nginx load balancing methods are applicable

upstream f1-api {
    server 10.1.1.4:8001;
    server 10.1.1.4:8002;
    sticky cookie srv_id expires=1h;
}

In the example above, Nginx balances requests across two backends. When a request is routed to one of them, Nginx adds a cookie (srv_id) identifying that server to the response, so that subsequent requests carrying the cookie are sent to the same server. The sticky directive is available only in Nginx PLUS.

In this way, Nginx balances the load between service instances. It routes requests to the various instances of a microservice, spreading the load evenly and preventing any single instance from becoming a bottleneck.
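Because sticky cookies are an Nginx PLUS feature, it helps to know what the open-source load balancing methods look like as well. The sketch below shows two of them; the upstream names and addresses are illustrative, and a server block would reference one of them through proxy_pass.

```nginx
# Send each request to the server with the fewest active connections,
# taking server weights into account; max_fails/fail_timeout act as a
# passive health check.
upstream f1-api-least-conn {
    least_conn;
    server 10.1.1.4:8001 weight=2;
    server 10.1.1.4:8002 max_fails=3 fail_timeout=30s;
}

# Cookie-less session persistence: requests from the same client address
# (for IPv4, the first three octets) go to the same server while it is available.
upstream f1-api-ip-hash {
    ip_hash;
    server 10.1.1.4:8001;
    server 10.1.1.4:8002;
}
```

ip_hash is coarser than a cookie: many clients behind one NAT or proxy all land on the same backend.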

Rate Limiting

Nginx defines rate limits with the limit_req_zone directive, which is allowed only in the http context, outside any server block. You can define more than one zone and apply several rate limits to the same location.

limit_req_zone $binary_remote_addr zone=mylimit:10m rate=10r/s;

server {
    location /login/ {
        limit_req zone=mylimit;
        proxy_pass http://my_upstream;
    }
}

In the example above, rate limiting is configured with two main directives: limit_req_zone, which defines the rate limiting parameters (the key, here the client IP address in $binary_remote_addr; a 10 MB shared memory zone named mylimit; and a rate of 10 requests per second), and limit_req, which enables rate limiting in the context where it appears (here, for all requests to /login/).
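Without a burst parameter, requests that exceed the configured rate are rejected immediately. A sketch of common refinements, assuming the same hypothetical my_upstream: a burst allowance, a second zone that caps the total rate per virtual server, and a 429 status instead of the default 503. Zone names and numbers are illustrative.

```nginx
# http context: per-client limit keyed on client IP, plus a global cap per server name
limit_req_zone $binary_remote_addr zone=perip:10m rate=10r/s;
limit_req_zone $server_name        zone=perserver:10m rate=100r/s;

server {
    location /login/ {
        # Up to 20 excess requests are accepted and served without delay;
        # anything beyond the burst is rejected
        limit_req zone=perip burst=20 nodelay;

        # Excess requests up to the burst are queued and delayed to match the rate
        limit_req zone=perserver burst=50;

        # Reject with 429 Too Many Requests instead of the default 503
        limit_req_status 429;

        proxy_pass http://my_upstream;
    }
}
```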

Caching

API Gateways can cache responses from service instances to reduce latency and the load on services, especially for frequently read data.
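A minimal caching sketch, assuming the responses under /api/f1/ are identical for every client (per-user or authenticated data should not be cached this way). The cache path, zone name, validity time, and backend address are illustrative.

```nginx
# http context: 10 MB of cache keys in shared memory, up to 1 GB of responses on disk
proxy_cache_path /var/cache/nginx/api levels=1:2 keys_zone=api_cache:10m
                 max_size=1g inactive=10m use_temp_path=off;

upstream f1-api {
    server 10.1.1.4:8001;   # illustrative backend address
}

server {
    listen 80;

    location /api/f1/ {
        proxy_cache api_cache;
        proxy_cache_valid 200 1m;                       # cache successful responses for one minute
        proxy_cache_use_stale error timeout updating;   # serve stale data if the backend fails
        proxy_cache_lock on;                            # one upstream request per missing key
        add_header X-Cache-Status $upstream_cache_status;   # HIT, MISS, STALE, ...
        proxy_pass http://f1-api;
    }
}
```

The X-Cache-Status header makes it easy to check from a client whether a given response came from the cache.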

Benefits of an API Gateway

The API Gateway offers numerous benefits such as:

1. Simplified client interaction – by presenting a single, unified API to the client, the API Gateway simplifies interaction with the back-end services.

2. Enhanced security – by handling authentication and authorization, the API Gateway adds an extra layer of security, protecting back-end services from unauthorized access.

3. Improved performance – through caching and load balancing, the API Gateway can significantly improve the performance of service interactions.

4. Centralized management – the API Gateway centralizes the management of services, making it easier to monitor and control the whole API ecosystem.

Conclusion

We’ve gone through the most important functionalities of Nginx as an API Gateway, along with its benefits and key tips for configuring it.

In the era of microservices and cloud-based applications, the API Gateway plays a crucial role in managing and securing service interactions. By acting as a single entry point for all client requests, it not only simplifies client interactions but also enhances system performance and security. As APIs continue to grow in popularity and complexity, the role of an API Gateway will become increasingly important in organizations’ digital transformation journey.

Tools like Nginx can significantly improve how you manage your APIs and streamline operations. To see how we use Nginx to ensure the best performance of our 3D web apps, read the article on our product’s dedicated page.
